- EfficientViT: 9x faster mobile semantic segmentation.

- Linear attention maps capture pixel relationships at lower computational cost.

- Hardware-friendly design for VR and autonomous vehicles.

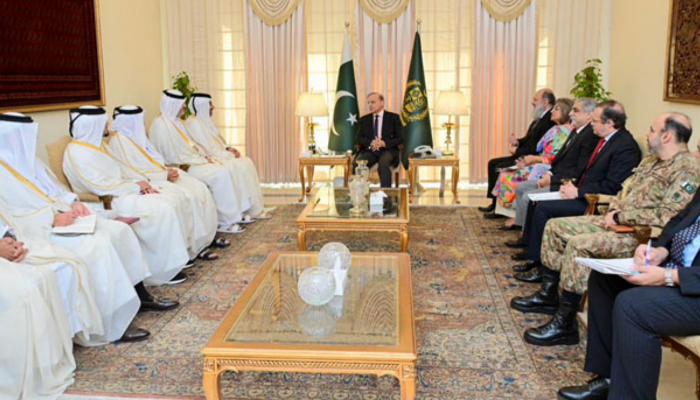

Researchers from MIT, in collaboration with the MIT-IBM Watson AI Lab and other institutions, have unveiled an innovative computer vision model designed to significantly reduce the computational complexity of semantic segmentation.

This breakthrough allows the model to perform real-time semantic segmentation efficiently on devices with limited hardware resources, such as the onboard computers used in autonomous vehicles.

Semantic segmentation, a crucial task for autonomous vehicles, involves rapidly and accurately assigning a class label to every pixel of a scene, so that the vehicle can identify objects ranging from stationary delivery trucks to fast-approaching cyclists. Traditionally, state-of-the-art models have struggled to process high-resolution images in real time because the computation required by their attention mechanisms grows quadratically with the number of pixels in the image.
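The article does not quantify this quadratic growth. As a rough illustration (not taken from the researchers), the sketch below counts the pairwise similarity scores a full attention map holds. It assumes a vision transformer that treats each 16x16-pixel patch as one token; the patch size and the function name `attention_pairs` are hypothetical choices for this example.

```python
def attention_pairs(height: int, width: int, patch: int = 1) -> int:
    """Count the pairwise similarity scores in a full attention map.

    `patch` is a hypothetical token size: each patch x patch block of pixels
    becomes one token. patch=1 means one token per pixel.
    """
    tokens = (height // patch) * (width // patch)
    return tokens * tokens  # every token is compared with every other token


base = attention_pairs(512, 1024, patch=16)      # 32 x 64 = 2048 tokens
doubled = attention_pairs(1024, 2048, patch=16)  # 64 x 128 = 8192 tokens
print(base, doubled, doubled // base)            # prints: 4194304 67108864 16
```

Doubling the width and height quadruples the number of tokens, so the number of pairwise scores grows sixteenfold. A linear-complexity design would instead grow only fourfold.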

The MIT team introduced a novel building block for semantic segmentation models that maintains the accuracy of existing models while offering linear computational complexity and hardware-efficient operations. This development has led to a new model series, named EfficientViT, which runs up to nine times faster than previous models when deployed on mobile devices while matching or exceeding their accuracy.

The key innovation in the EfficientViT model series lies in how it builds attention maps, which capture the relationships between pixels in an image. Existing models rely on a nonlinear similarity function (softmax), which requires computing a score for every pair of pixels. The MIT researchers instead employed a linear similarity function, which lets them reorder the computation so that its cost grows linearly with the number of pixels without sacrificing the global receptive field. However, this linear approach tends to lose some local information, so the researchers incorporated additional components that recover accuracy while adding little computation.
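The article does not give the exact formula. A minimal NumPy sketch of the general idea follows, assuming a ReLU feature map as the linear similarity function, a common choice in linear-attention work. The function names are hypothetical, this is not the EfficientViT code, and it does not include the extra components the researchers added to recover local information. Because the similarity has no softmax, the product can be regrouped so that the N x N matrix is never built.

```python
import numpy as np


def softmax_attention(q, k, v):
    """Standard attention: builds the full N x N similarity matrix (O(N^2)).

    The usual 1/sqrt(d) scaling is omitted for brevity.
    """
    scores = q @ k.T                                   # (N, N) pairwise similarities
    scores = np.exp(scores - scores.max(axis=1, keepdims=True))
    weights = scores / scores.sum(axis=1, keepdims=True)
    return weights @ v                                 # (N, d)


def relu_linear_attention(q, k, v, eps=1e-6):
    """Linear attention with an assumed ReLU feature map.

    The similarity is ReLU(q_i) . ReLU(k_j), so the output can be regrouped as
    ReLU(Q) @ (ReLU(K).T @ V). The cost grows linearly with N.
    """
    q, k = np.maximum(q, 0), np.maximum(k, 0)
    kv = k.T @ v                                       # (d, d): size does not depend on N
    k_sum = k.sum(axis=0)                              # (d,) used for normalization
    numerator = q @ kv                                 # (N, d)
    denominator = q @ k_sum + eps                      # (N,)
    return numerator / denominator[:, None]


def relu_linear_attention_quadratic(q, k, v, eps=1e-6):
    """Same ReLU similarity computed the slow N x N way, for checking."""
    q, k = np.maximum(q, 0), np.maximum(k, 0)
    sim = q @ k.T                                      # (N, N)
    return (sim @ v) / (sim.sum(axis=1, keepdims=True) + eps)


rng = np.random.default_rng(0)
n, d = 1024, 32
q, k, v = (rng.standard_normal((n, d)) for _ in range(3))
fast = relu_linear_attention(q, k, v)
slow = relu_linear_attention_quadratic(q, k, v)
print(np.allclose(fast, slow))                         # prints: True
```

Both linear variants produce the same result. The regrouped version only ever stores d x d and N x d arrays, whereas the softmax version must materialize the N x N matrix before normalizing each row.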

EfficientViT’s hardware-friendly architecture makes it suitable for various devices, from virtual reality headsets to autonomous vehicle edge computers, and it can also be applied to other computer vision tasks such as image classification.

In tests using datasets for semantic segmentation, EfficientViT outperformed popular vision transformer models, running up to nine times faster on Nvidia GPUs while maintaining or improving accuracy. This breakthrough not only benefits autonomous vehicles by enabling real-time decision-making but also holds promise for enhancing efficiency in other high-resolution computer vision applications, including medical image segmentation.

The researchers intend to extend the application of this technique to accelerate generative machine-learning models and further scale EfficientViT for various vision-related tasks. Experts in the field have praised this research for its potential to drive efficient AI computing in real-world applications and enhance image quality in areas like video games and cloud-based services.

